Get Ready for Large Action Models in Banking

You open an app and describe a financial task in your own words. You do not care which of your many financial relationships accomplishes the task, only that the task gets done. Where you might traditionally use an interface such as Siri or Alexa to pay your Verizon bill on time, you now tell your virtual assistant to “Reduce expenses by 10%, increase earnings by 10%, and put the difference into a savings account to result in more than $1 million in 10 years.” This is doable today with generative AI and the rise of “large action models.” It is about to become the realm of banks.

What is a Large Action Model?

While you may be familiar with “large language models” (LLMs), such as those that power ChatGPT or banking applications like our “Tate,” a large action model (LAM) turns written or spoken intentions into action. LAM applications like Rabbit AI will soon usher in the next generation of apps and devices, putting LAMs into the hands of businesses and consumers. LAMs will be to 2024 what LLMs were to 2023. Humans, or machines, show a LAM a given workflow, and the LAM turns it into action by optimizing the intent and process.

It is important to note that there is no typing into search engines, no recording of workflows or clicks, no switching between separate apps on the smartphone, and no programming. Where applications like AutoGPT leverage an existing set of APIs or pre-designed integrations, a LAM interfaces with the existing user interfaces of applications just as humans do. Like humans, LAMs “read” the graphics and the code of a website or application to create their own workflow and complete a given task.

Moreover, because the LAM can understand the complete user interface, it can learn an entire workflow and then reorder it to optimize the collection of information and inputs. If a piece of information is already stored or available in another application, the LAM pulls it from that application instead of asking the user.
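The "pull it from another application before asking the user" behavior can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `collect_inputs`, the field names, and the dictionaries standing in for connected applications are all hypothetical, and a real LAM would discover these sources on its own rather than being handed them.

```python
from typing import Callable


def collect_inputs(
    required: list[str],
    sources: list[dict[str, str]],
    ask_user: Callable[[str], str],
) -> tuple[dict[str, str], list[str]]:
    """Fill each required field from known sources first; ask the user last.

    `sources` are checked in order, so earlier sources take priority.
    Returns the collected values and the list of fields the user was asked for.
    """
    values: dict[str, str] = {}
    asked: list[str] = []
    for field in required:
        for source in sources:
            if field in source:
                values[field] = source[field]
                break
        else:
            # No connected application had the field, so fall back to the user.
            values[field] = ask_user(field)
            asked.append(field)
    return values, asked
```

In this sketch the user is interrupted only for fields that no connected application can supply, which is the ordering the paragraph above describes.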

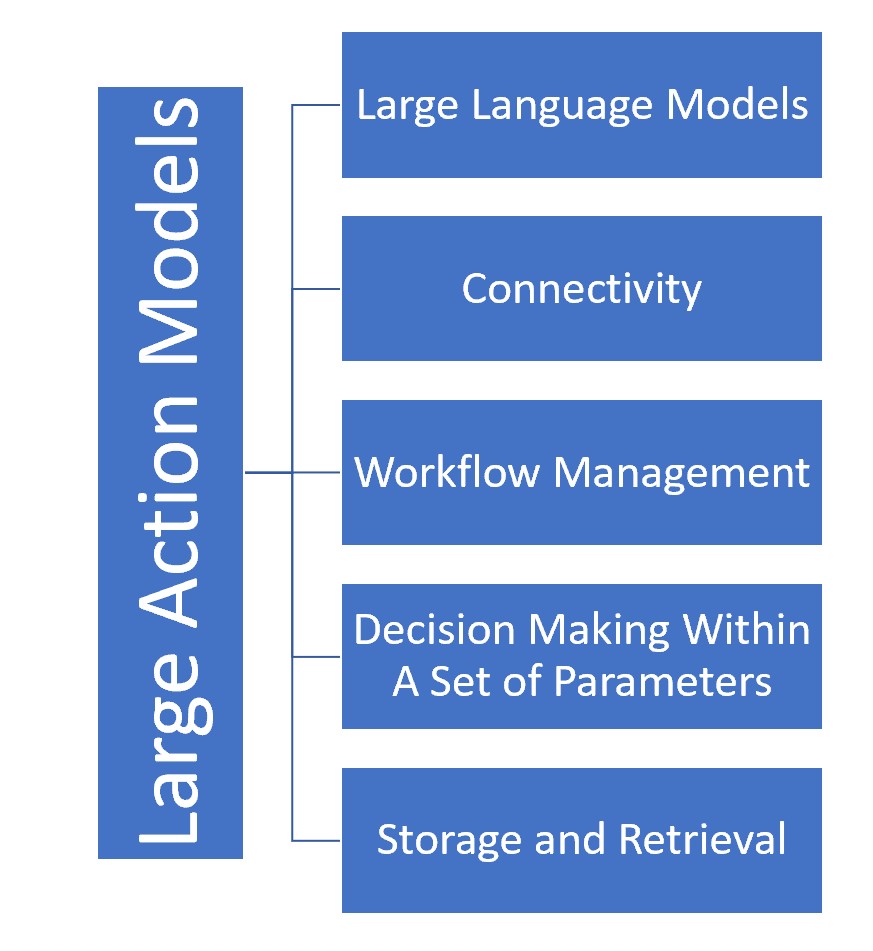

To recap, a LAM combines the following capabilities:

- Connections: Connects various applications and APIs.

- Multimodal Learning Models: Uses “neuro-symbolic programming” to understand the user interface and API requirements and create a customized workflow. The LAM uses a “multimodal model” to look at an application’s HTML code and graphic elements to deduce the required workflow and inputs. The LAM automatically adapts if you change the workflow, user interface, or API. This is like having an intelligent robotic process automation (RPA) application that is personalized and has unlimited flexibility.

- Instruction Abstraction: The LAM takes a series of high-level instructions learned from an interface and creates an advanced workflow to connect multiple applications seamlessly.

- Direct Human Modeling: If a LAM cannot deduce a workflow through its multimodal model, it can observe human interactions with applications and create a “recipe” or template for completing the task. It can then store these recipes to use again later.

- Task Reasoning: A LAM sequences instructions together to try to accomplish a goal. If instructions or data are not immediately available, the LAM reasons about how to get the information most efficiently, either by using other applications or by asking the user. In other words, large action models not only understand each step in the process but also understand why each step occurs where it does in the sequence. This allows the LAM to optimize workflow and application interaction.

- LLMs: Uses large language models (LLMs) to communicate with humans and to understand content, such as a website, to learn how to complete a task. Having LLM access also helps manage pre- and post-processing prompts by giving LAMs context, filtering answers, and cross-checking answers or actions to improve accuracy.

- Continuous Learning: Unlike fixed programming, LAMs learn with each interaction while testing if the workflow can be more efficient. This constant improvement creates an evolutionary pattern, allowing the LAM to improve without human programming.

- T2S & S2T: Allows for text-to-speech and speech-to-text conversion.
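The task reasoning capability, in which a LAM knows why each step sits where it does, can be loosely illustrated as dependency ordering. The sketch below uses Python's standard-library `graphlib` and is not how any particular LAM is implemented; the step names and the `plan` helper are hypothetical, and a real model would infer the dependencies rather than receive them as a dictionary.

```python
from graphlib import TopologicalSorter


def plan(steps: dict[str, set[str]]) -> list[str]:
    """Return an execution order in which every step follows its prerequisites.

    `steps` maps each step name to the set of steps that must finish first.
    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(steps).static_order())


# Hypothetical account-opening workflow, listed out of order on purpose.
workflow = {
    "fund_account": {"open_account"},
    "open_account": {"verify_identity"},
    "verify_identity": {"collect_documents"},
    "collect_documents": set(),
}

for step in plan(workflow):
    print(step)
```

Because each step records what it depends on, the order can be recomputed whenever a step is added or removed, which is a simplified version of the reordering described in the list above.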

A Banking Example of a Large Action Model

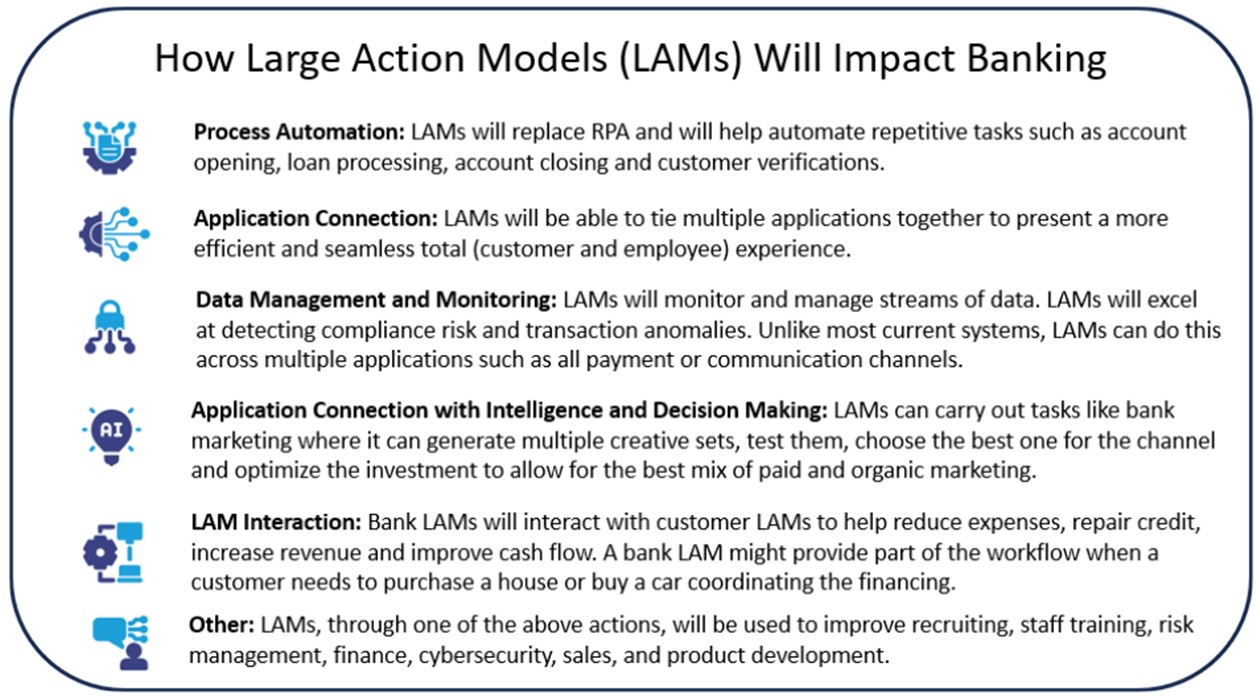

LAMs impact banks in two ways. First, consumers and businesses will have their own LAMs, and the bank will interact with these agents. Second, the bank will have its own LAMs to assist the customer.

Over time, banks will have to cater to LAMs. In effect, banks will have an additional customer segment. A consumer or business will have a “digital twin,” a LAM agent that carries out tasks on their behalf. For security reasons, banks will start to create “companion accounts” that give the large action model specific access that the user controls, including a set of limits. The LAM will act as an agent for the user, similar to how a user may provide a power of attorney to a trustee or guardian.
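As a rough illustration of what user-controlled limits on a companion account could look like, consider the sketch below. Everything in it is an assumption made for the example: the `CompanionAccount` class, the action names, and the two kinds of limits are hypothetical and do not describe any existing bank product.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class CompanionAccount:
    """Hypothetical delegated-access profile a user grants to a LAM agent."""

    allowed_actions: frozenset[str]
    per_transaction_limit: Decimal
    daily_limit: Decimal
    spent_today: Decimal = Decimal("0")

    def authorize(self, action: str, amount: Decimal) -> bool:
        """Approve the request only if it stays inside every user-set limit."""
        if action not in self.allowed_actions:
            return False
        if amount > self.per_transaction_limit:
            return False
        if self.spent_today + amount > self.daily_limit:
            return False
        # Record the approved amount against the daily allowance.
        self.spent_today += amount
        return True
```

The design choice here is that the agent never holds the user's full authority. Each request is checked against the delegated scope, much like the limits written into a power of attorney.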

Of course, these LAMs don’t care about the aesthetics of a workflow or website, so information and requests for data will be presented as code-based scripts, making it more efficient for these LAM agents to interact with a bank. Banks will present a “LAM view” of each workflow.

For example, a secured LAM will have access to a user’s driver’s license, photo, tax records, and W-2s. The bank will recognize that the login is a LAM acting for an individual, authenticate it, and then provide access to streamlined workflows. Should the user want to open an account, the LAM will have all the information at the ready, reducing the current five-minute digital process to seconds.
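A “LAM view” of account opening might amount to a machine-readable list of required fields that the agent fills from the user's stored documents. The following is a minimal sketch under that assumption; the JSON layout, the field names, and the `build_submission` helper are invented for illustration and do not reflect any published bank interface.

```python
import json

# Hypothetical "LAM view" a bank might publish in place of a graphical form.
LAM_VIEW = json.loads("""
{
  "workflow": "open_deposit_account",
  "fields": [
    {"name": "legal_name", "required": true},
    {"name": "drivers_license_number", "required": true},
    {"name": "tax_id", "required": true},
    {"name": "employer", "required": false}
  ]
}
""")


def build_submission(view: dict, vault: dict) -> str:
    """Build the JSON payload for a workflow from the user's document vault.

    Only fields the bank asks for are sent; anything else in the vault stays
    private. Raises ValueError if a required field is unavailable.
    """
    fields = view["fields"]
    missing = [f["name"] for f in fields if f["required"] and f["name"] not in vault]
    if missing:
        raise ValueError(f"missing required fields: {missing}")
    data = {f["name"]: vault[f["name"]] for f in fields if f["name"] in vault}
    return json.dumps({"workflow": view["workflow"], "data": data}, sort_keys=True)
```

With every required field already in the vault, the submission is assembled in a single pass, which is the kind of shortcut that would turn a multi-minute form into a near-instant exchange.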

Then, there are LAMs controlled by the bank.

LAMs will make banks more efficient. At present, a bank presents multiple user interfaces and applications to its customers. It may have one for commercial customers, another for small business customers, and still another for retail customers. Some banks also have other interfaces to handle specific products or customer segments. A bank may also have an API to allow customers or vendors to enable certain products or processes. Each interface or API has fixed rules, requirements, and workflows. If one interface changes, the bank needs to redesign the workflows that depend on it.

Large banks, for example, get frustrated with the various applications they need to service the customer, each with its own user experience, data syntax, workflow, and requirements. As a result, banks often create a “front end,” an additional user-facing application, to standardize the experience. They then usually build “middleware” to handle the connections between applications. This architecture is rigid and requires much effort to maintain.

Large action models handle the task differently. A LAM can analyze both the user interface and the API to determine the best path for the customer. The LAM learns what information is required and then prompts the user with its best “guess” based on past usage. LAMs think more like humans than programmed applications do and can understand the intent of the user. As such, banks will use LAMs to tie multiple applications together.
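The best “guess” based on past usage can be pictured as a simple frequency count over earlier interactions. This is a deliberately naive sketch: `best_guess` and the record layout are hypothetical, and a production LAM would presumably weigh recency and context as well as raw counts.

```python
from collections import Counter
from typing import Optional


def best_guess(history: list[dict[str, str]], field: str) -> Optional[str]:
    """Suggest the value the user has most often supplied for `field`.

    Returns None when the field has never been seen, so the caller knows
    it must ask the user an open question instead of offering a default.
    """
    values = [record[field] for record in history if field in record]
    if not values:
        return None
    return Counter(values).most_common(1)[0][0]


# Hypothetical record of past transfers made by one customer.
past_transfers = [
    {"from_account": "checking", "to_account": "savings"},
    {"from_account": "checking", "to_account": "brokerage"},
    {"from_account": "checking", "to_account": "savings"},
]
```

The user then confirms or corrects the suggestion rather than typing it from scratch.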

For example, a bank may have a LAM that moves whole relationships over, opening a set of accounts and multiple products at once. If a small business wants to move the accounts of the owners, the company, and the employees to a new bank, that bank’s LAM can be specialized enough to do this efficiently and at scale.

A bank will have a LAM that will help refinance a mortgage at another institution and another LAM that specializes in improving a household’s or business’s cash flow.

If an application, such as a bank’s core, changes an interface or technical aspect of its API, there is no problem as the LAM adapts instantly. Bank architecture will become more flexible by using large action models. LAMs will be combined with modern cores to create an endless supply of personalized products, all within a bank’s parameters and risk tolerance. LAMs will help borrowers customize the terms and conditions of their loans, all while helping both the bank and the borrower complete the loan process and onboard the loan.

LAMs will also be used internally. Separate LAMs specializing in security, for example, will look for application vulnerabilities and help fix network and application weaknesses. Other LAMs will handle compliance, risk management, and operations.

When the trend stabilizes, LAMs are predicted to increase banker productivity by a factor of ten.

Putting This Into Action

Large action models combine the fluency of natural language with a task-oriented agent that seeks to satisfy a goal efficiently and can connect multiple applications. LAMs will be designed to do these tasks in safe, secure, and compliant ways and will usher in a new era of applications that make households and organizations more efficient and accurate.

Bank architecture and application development will soon head in a completely new direction, along with almost every facet of the bank. LAMs will reduce the need for banks to provide extensive user interfaces, thereby speeding the development and deployment of applications and products.

In addition, look for LAMs to replace many traditional robotic process automation (RPA) tools within banks. These intelligent agents will be faster to develop than RPA bots, will have decision-making capabilities, and will be more versatile.

In the next several months, look for large action models to become more plentiful. Once they do, bank management will want to put these models on its radar, track their progress, and decide when the time is right to experiment and test. In parallel, just as banks had to create new committees and governance for AI and generative AI, LAMs will require upgrades to those governance structures, policies, and procedures.

Just as the internet spawned millions of new applications, large language models are in the process of doing the same. Large action models are just one of the many offshoots of that trend, but one that is likely to have an outsized impact on banking.