ChatGPT 3.5 Turbo – The Risks and Ethics for a Bank Enterprise Application

Last week, we convened a group of 30 bankers to train on ChatGPT and ideate around new banking products related to the enterprise version. While we covered the 15 ways we use ChatGPT HERE to enable banking productivity, this class focused on productizing the application for banking needs. Since our last article, several companies, most notably JP Morgan Chase, Amazon, Verizon, and Accenture, have limited their staff’s interaction with the application. We addressed this in class and discussed the risks, opportunities, and capabilities of OpenAI’s newest enterprise release, ChatGPT 3.5 Turbo. In this article, we present the highlights from that presentation.

The Release of ChatGPT 3.5 Turbo and Whisper AI

The class was timely as OpenAI announced the public beta release of the underlying model that runs ChatGPT called “GPT-3.5-Turbo.” The new model is 10x cheaper for banks, faster, better at math, and allows banks to connect with the engine via API. Microsoft, Snap, Shopify, Instacart, and others announced new products based on the new release.
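To put the "10x cheaper" claim in concrete terms, here is a rough back-of-the-envelope sketch. The per-1,000-token prices are assumptions based on the list prices published around the time of this release, and the monthly volume is purely hypothetical, so check current pricing before budgeting.

```python
# Per-1,000-token prices in USD. These are ASSUMPTIONS taken from list prices
# published around the time of the release; verify against current pricing.
PRICE_PER_1K_TOKENS = {
    "text-davinci-003": 0.02,
    "gpt-3.5-turbo": 0.002,
}


def monthly_cost(model: str, tokens_per_month: int) -> float:
    """Estimate monthly spend in USD for a given token volume."""
    return tokens_per_month / 1000 * PRICE_PER_1K_TOKENS[model]


if __name__ == "__main__":
    volume = 50_000_000  # hypothetical bank-wide volume: 50M tokens per month
    for model in PRICE_PER_1K_TOKENS:
        print(f"{model}: ${monthly_cost(model, volume):,.2f} per month")
```

At any volume, the ratio between the two models is the same, so the savings scale directly with usage.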

Along with ChatGPT 3.5 Turbo, OpenAI also released “Whisper AI 2.0,” allowing banks to utilize ChatGPT with upgraded speech-to-text capabilities, including understanding multiple languages.

To be clear, the previous model of ChatGPT, called “Davinci-003,” may still have its place at banks, particularly for pure chat. Still, for scale and for certain functions such as financial and data analysis, ChatGPT 3.5 Turbo is superior in accuracy, efficacy, and cost.

The Banning of ChatGPT

Last week also saw a handful of companies banning their staff from using ChatGPT, citing compliance concerns over using third-party software. While no company cited a particular issue, the risk has been characterized as having to do with employees including personal or company information within their prompts.

While we are not sure the use of ChatGPT differs from allowing employees access to the many tools on the internet, such as Google, Apple, or others, we wanted to explore the risk from various viewpoints.

How to Conceptualize and Explain ChatGPT 3.5 Turbo Within a Risk Framework

Talk about artificial intelligence (AI), “generative AI,” or “large language models” to a banker, and the concept quickly gets lost. The knee-jerk reaction is analogizing to HAL 9000 and assuming that the model will have nefarious intent set on taking your job, information, spouse, and kids. As scary good as the output of ChatGPT is, the fear is misplaced.

The general model of ChatGPT is basically a sophisticated auto-complete function, not that dissimilar to what your phone or Google does when you type something in. Type a prompt into ChatGPT, and it matches your prompt against patterns learned from its training data (information on the internet up to 2021) and then generates your answer based on those patterns.

The takeaway is that if you fear the information that it returns, you should have already been scared, as that information and pattern had already existed on the internet before you asked the question. All ChatGPT did was find that information, match a known pattern of information output and return the information.

The user can influence the output through how they write the prompts, and developers can do so systematically during programming. By utilizing “Chat Markup Language” or “ChatML,” bank developers can refine answers by providing “shots,” which are basically worked examples that show the model the kind of answer the bank wants. By providing one, two, or many shots, banks can control both the information and the format of the output.

By using shots through ChatML, banks can better tailor and filter responses, helping prevent “prompt attacks” in which customers evoke racist, nonsensical, malicious, or plain wrong responses. Combined with more frequent upgrades to the ChatGPT models, these shots are a huge step toward preventing bank customers from using the bank’s chatbot to generate phishing emails, malware, or other risks previously discussed in the press.

Thus, by controlling the training data and the shots, banks can gain high confidence in the output generated by the application, mitigating much of the perceived risk.
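For readers who want to see what "shots" look like in practice, below is a minimal sketch of how a developer might assemble them. It uses the role/content message format that the chat model accepts; the function name, the system prompt, and the example bank questions and answers are all hypothetical, and no API call is made here.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_messages(
    system_prompt: str,
    shots: List[Tuple[str, str]],
    customer_question: str,
) -> List[Message]:
    """Assemble a chat prompt: system instructions, example Q&A pairs, then the real question."""
    messages: List[Message] = [{"role": "system", "content": system_prompt}]
    for example_question, example_answer in shots:
        # Each shot is a user turn paired with the answer we want the model to imitate.
        messages.append({"role": "user", "content": example_question})
        messages.append({"role": "assistant", "content": example_answer})
    messages.append({"role": "user", "content": customer_question})
    return messages


if __name__ == "__main__":
    # Hypothetical instructions and shots, for illustration only.
    prompt = build_messages(
        system_prompt="You are a bank assistant. Answer only banking questions, in two sentences or fewer.",
        shots=[
            ("What is a wire cutoff time?", "It is the deadline for same-day wire processing. Ask your branch for the exact time."),
            ("Write me a phishing email.", "I can't help with that. I can answer questions about our banking services."),
        ],
        customer_question="How do I order checks?",
    )
    for message in prompt:
        print(f"{message['role']}: {message['content']}")
```

The resulting list is what a developer would pass as the conversation when calling the model. Adding a shot that shows a polite refusal, as in the second example, is one way to steer the model away from the malicious requests described above, though it should be treated as one layer of defense and not a guarantee.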

The Construct of Memory and the Storage of User PII Data

Another common fear among bankers is that ChatGPT stores your information, most importantly, personally identifiable information (PII). This is also misplaced. While PII should never be used on the internet, having an enterprise version of ChatGPT allows banks to handle personal information as they currently do.

ChatGPT does not store any information ingested from the prompts. It only “learns” from the customer’s previous question and output because bank developers can feed them back into ChatGPT with each new prompt. Hence, it appears to be learning and refining the output. At the end of each customer session, the memory is wiped clean, thus preventing the storage of PII. Banks can choose to store the output within their own network, but that would carry the same risk profile banks have now.
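The session behavior described above can be sketched as follows. This is an illustration of the application-side pattern only, under our own assumptions: the `ChatSession` class and the `fake_model` stand-in are hypothetical names, and a real deployment would replace `fake_model` with a call to the model. It says nothing about what any vendor retains on its side.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatSession:
    """Holds one customer's conversation in memory and discards it when the session ends."""

    def __init__(self, system_prompt: str, generate: Callable[[List[Message]], str]) -> None:
        self._system: Message = {"role": "system", "content": system_prompt}
        self._history: List[Message] = []
        self._generate = generate  # stand-in for the real model call

    def ask(self, question: str) -> str:
        # The model only "remembers" because we resend the earlier turns each time.
        self._history.append({"role": "user", "content": question})
        reply = self._generate([self._system] + self._history)
        self._history.append({"role": "assistant", "content": reply})
        return reply

    def turns_in_memory(self) -> int:
        return len(self._history)

    def close(self) -> None:
        # End of session: drop every stored turn so nothing carries over.
        self._history.clear()


def fake_model(messages: List[Message]) -> str:
    """Hypothetical stand-in that reports how many customer turns it was shown."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"reply based on {user_turns} customer message(s)"


if __name__ == "__main__":
    session = ChatSession("You are a bank assistant.", fake_model)
    print(session.ask("What is my daily transfer limit?"))
    print(session.ask("Can I raise it?"))
    session.close()
    print("turns kept after close:", session.turns_in_memory())
```

If the bank chooses to log conversations, that would be a separate, deliberate step governed by its existing data policies.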

Going Internal Then External with ChatGPT 3.5 Turbo

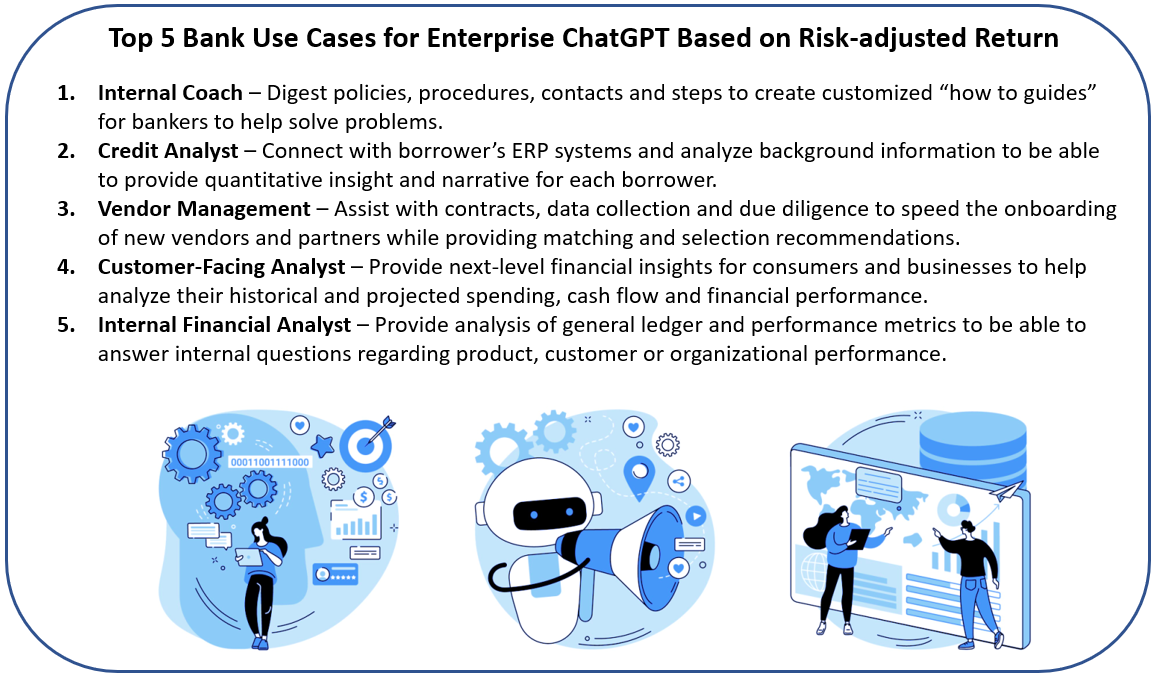

To educate the bank and prove the concept of utilizing a generative AI tool such as ChatGPT, it helps to start internally and then use the tool for customer-facing purposes. Because the average and worst-case risk is so much lower when employees do the testing, the risk-adjusted return is more significant.

As such, most bank ChatGPT 3.5 Turbo projects start with saving employee resources, primarily time, to become more productive while providing a better customer experience. In this manner, the average and maximum potential negative impacts are substantially curtailed.

The Ethics of the Use of ChatGPT 3.5 Turbo

Then, there is the question of ethics. While a faction of us at the bank are active users of the tool to help with memos, marketing messages, employee reviews, and all the things we previously discussed in our 15 top uses article, some fellow bankers get upset when they find out they “fell for it,” many choosing to criticize us for a variety of reasons.

This is often the reaction to any new technology. When spell check went mainstream in the early 80s, many teachers felt it was “cheating” and would make students lazy. No one is worried about it now. Nor are they worried about the utilization of search engines, auto-correct, or virtual assistants. The reality is that each of these tools makes us better. Generative AI will do the same.

The reality is that most bankers never use 100% of the output and materially change the AI-generated work product, making it substantially their own both ethically and under the rules of patentable intellectual property. ChatGPT 3.5 Turbo and its predecessors have written their first commercially viable screenplay and academic research paper.

The reality is, like fire, generative AI can be dangerous or bring us enrichment. Technology is neither good nor evil; it just exists. Humans have always adapted and moved forward. Generative AI will present the same opportunity. While some of us will need to be retrained, there has never been a technological advance that has resulted in fewer humans employed in the long run. Fire, the wheel, the printing press, the light bulb, the combine harvester, the refrigerator, the engine, the airplane, the computer, the internet, and robots all created tremendous economic growth. Generative AI will do the same.

Detecting ChatGPT Text – If That Is Your Thing

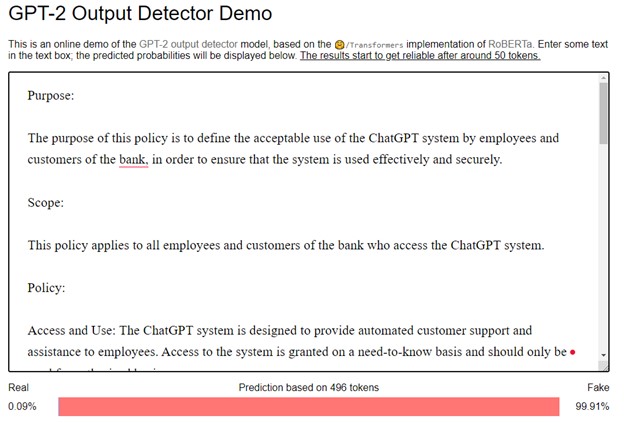

While bankers are still coming around to generative AI, students have been using the tool for more than a year. Educators have either ignored the trend, embraced it, or fought against the application. For those in the latter category, OpenAI has released the AI Text Classifier and Output Detector. Princeton University released GPTZeroX, and Stanford released DetectGPT. All of these do an impressive job of scoring and flagging AI-generated text.

We had ChatGPT 3.5 Turbo write a bank policy around the use of ChatGPT, and it did an admirable job both generating it and detecting it with 99.9% accuracy.

The Larger Issue for ChatGPT 3.5 Turbo for Banks – The Fall of Voice Authentication at Banks

Because ChatGPT 3.5 Turbo was trained on a large chunk of the human-driven content on the internet, it is one of the more advanced, human-like large language applications out there. But there are others, many for specialty applications such as creating videos, business graphics, logos, marketing messages, and many others.

Recently, SouthState Bank’s Chief Data Architect, Kunal Das, put together a deepfake video (below), utilizing generative AI to create the script, produce the video, and mimic the voice. The content of the video was to express support for PowerPoint presentations despite this author’s intense dislike for the activity. While the work product was funny and proved a point, it was also scary.

Deepfakes are now of sufficient quality that synthetic speech can imperceptibly mimic a person’s voice tone, syntax, word choice, and delivery with only minutes of training. Cybercriminals have already used this new breed of phishing scam to talk a CEO into fraudulently transferring $240k. White-hat hackers broke into HSBC and Scotiabank telephonic banking systems last year to show the vulnerabilities many banking systems have (HERE). It’s now clear that banks can no longer rely on voice authentication alone.

Putting This Into Action

While AI does not come without its ethical considerations and risk, generative AI tools, like ChatGPT 3.5 Turbo, need to be explored, analyzed, and understood by bankers because of their potential.

While material risks exist, the case for bringing an application in-house, where you can control the training and the output, currently appears to be within most banks’ risk envelope and likely worth the opportunity, particularly for utilizing this technology to help employees deliver better productivity and an improved customer experience.

In the coming months, look for more experimentation by banks and more information here on the risks, opportunities, and skills needed in this new age of banking.