Generative AI – 7 Lessons That Tate Taught Us

This month, we rolled out “Tate,” our generative AI chatbot driven by ChatGPT that was designed to increase the productivity of our employees and allow us to become familiar with large language models. It’s been two weeks since its introduction, and we have already learned more than we bargained for. As one of the first banks to bring this to production, we break down the seven most important lessons we have learned so far in hopes of making your institution’s path easier.

The Start of Our Generative AI Journey

“Tate,” a contraction of SouthState, was the brainchild of our Spark Innovation group, a team of 35 employees from various sections of the Bank. Spark brainstormed a use case, created personas, outlined a user journey, and then developed an action plan. Led by our Chief Architect and SVP of Digital Strategy, the effort was a lesson in how to be more innovative and work together toward the single goal of improving the customer experience.

One rule of these sessions is that you cannot worry about constraints: you can’t mention budget, regulation, risk, or capabilities. In these sessions, you can dream. Once a product or service is formulated, each team within the group can add to the idea and improve the product.

Once fully formulated, we then “red-teamed” the idea to highlight all the constraints and risks. Spark came up with a variety of use cases, risks, and ethical considerations (detailed HERE) before finalizing the first application of ChatGPT to try. Because of the many unknowns in generative AI, we landed on an internal-only use case to start. Tate ingests currently approved documents and provides natural language answers to our employees.

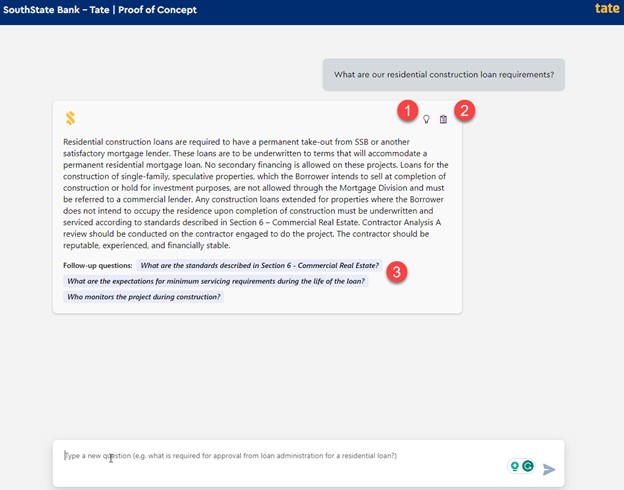

Our current state is an intranet built on ServiceNow, allowing employees to search for various documents to answer questions. The platform is slick; however, as with Google and other search engines, it takes the employee time to find the suitable document(s) and then search through that document to find the answer. On average, it takes an employee seven minutes to look for information on our intranet. This is an eternity when you are in front of a customer. With Tate (below), that search time was reduced to less than 32 seconds. If employees phrase the question, or “prompt,” correctly the first time, they can get their answer in under 15 seconds.

Tate essentially unlocks valuable information stored within our bank and makes it accessible to all. A banker’s job is attracting and managing customers, understanding risk, and pricing it appropriately. Tate makes it easier to do just that, and to do it more consistently. It is like giving every employee a team of researchers that can render instant responses. Training time gets reduced, productivity increases, and employees provide more accurate and helpful answers.

The Cost of an OpenAI Chatbot Agent

We built Tate, a “closed-sourced” model (i.e., one that uses only internal data), in our Azure environment, leveraging Azure OpenAI, Cognitive Search, and Azure App Services. At an internal cost of around $25k to build and a direct cost of approximately $50 per day per 100 employees, a ChatGPT investment could cost a bank with 100 active users around $13k per year to operate. At two questions per user per day, that is a time savings of around 5,200 hours, or $442,000 of employee productivity, per year. That is an 11.6x return in the first year, or a breakeven of about one month.
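The arithmetic behind these figures can be reconstructed as a rough sketch. The 260 working days, the roughly six minutes saved per question, and the $85 hourly value of employee time are not stated in the text; they are assumptions chosen because they reproduce the quoted numbers.

```python
# Back-of-the-envelope reconstruction of the cost and return figures.
# Stated in the article: build cost, daily run cost, 100 users, 2 questions/day.
# ASSUMED (not stated): 260 working days, 6 minutes saved per question,
# and an $85/hour value of employee time.

BUILD_COST = 25_000              # one-time internal build cost ($)
RUN_COST_PER_DAY = 50            # direct cost per day per 100 employees ($)
USERS = 100
QUESTIONS_PER_USER_PER_DAY = 2

WORKING_DAYS = 260               # assumption
MINUTES_SAVED_PER_QUESTION = 6   # assumption: ~7 min search vs. ~32 s with Tate
HOURLY_VALUE = 85                # assumption ($/hour)

annual_run_cost = RUN_COST_PER_DAY * WORKING_DAYS
questions_per_year = USERS * QUESTIONS_PER_USER_PER_DAY * WORKING_DAYS
hours_saved = questions_per_year * MINUTES_SAVED_PER_QUESTION / 60
annual_benefit = hours_saved * HOURLY_VALUE

first_year_cost = BUILD_COST + annual_run_cost
first_year_return = annual_benefit / first_year_cost
breakeven_months = first_year_cost / (annual_benefit / 12)

print(f"Annual run cost:    ${annual_run_cost:,.0f}")
print(f"Hours saved:        {hours_saved:,.0f}")
print(f"Annual benefit:     ${annual_benefit:,.0f}")
print(f"First-year return:  {first_year_return:.1f}x")
print(f"Breakeven (months): {breakeven_months:.1f}")
```

Changing any of the three assumed inputs shifts the return materially, so an institution should substitute its own search times and labor costs.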

Generative AI Lessons

After several weeks of testing, we detail below our most important lessons to date.

One: Generative AI Is Wrapped in The Fear of the Unknown

As you might expect, the most significant impediment in getting approval was what we call “chasing ghosts.” Many of our employees had concerns, some founded and some unfounded, but most were hard to quantify. Once a risk was quantified, it became easier to mitigate.

To nail down these ghosts, we aggregated all the potential risks, formed a thesis for each, designed a test, and then had each risk professional go hands-on to test the thesis. Based on the scientific method, this approach enabled us to focus on the highest risks.

Two: Thought Process, Supporting Content & Citations

A masterstroke of our development team was to build into Tate the ability to provide the “thought process,” supporting content, and citations for each answer (Items 1 and 2 in the above screenshot). This helped reduce the “black box” risk so testers and employees could understand how Tate’s answers were derived. Running a representative sample of some 1,600 questions, we used the “human-in-the-loop” (HITL) methodology and had each tester rate the accuracy of the information. This included a numerical rating plus the model output to support their ratings. By tracking Tate’s thought process, we were able to iterate faster to improve the model’s accuracy.
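As an illustration only, tester ratings of this kind could be recorded and summarized along the following lines. The field names, the 1-to-5 scale, and the review threshold are hypothetical and are not taken from Tate.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Rating:
    question_id: int
    tester: str
    score: int          # hypothetical scale: 1 (wrong) to 5 (fully accurate)
    model_output: str   # the answer the tester saw, kept as supporting evidence


def summarize(ratings, low_score=2):
    """Return the mean score and the question IDs that need another look."""
    avg = mean(r.score for r in ratings)
    needs_review = sorted({r.question_id for r in ratings if r.score <= low_score})
    return avg, needs_review


# Placeholder data for illustration; not real test results.
ratings = [
    Rating(1, "tester_a", 5, "example answer text"),
    Rating(2, "tester_a", 2, "example answer text"),
    Rating(2, "tester_b", 1, "example answer text"),
    Rating(3, "tester_b", 4, "example answer text"),
]

avg, needs_review = summarize(ratings)
print(avg, needs_review)
```

Keeping the model output next to each score is what lets a reviewer later trace a low rating back to the answer and the documents it cited.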

Three: Source Document Construction, Management, and Flags

Every company should stop internal document production and teach employees about optimized data construction. The use of large language models in all companies is inevitable, so you might as well start preparing now.

The principles that make a document easy for a search engine crawler to read, the basis of search engine optimization (SEO), largely apply to generative AI as well. Poorly structured documents cause the bulk of generative AI inaccuracies. Where humans weigh the appropriateness of information based on the situation, generative AI weights the data based on the prompt and the structure of the referenced documents. Using keywords, clear headings, graphics with alt tags, short sentences, defined jargon, and formatting all help improve the output of generative AI models.

For example, instead of calling a section “Next Steps,” it would be better to create a heading such as “How To Find Out More About Treasury Management.” This gives the generative AI model more references and a clearer inferred index of the information. Another example is to limit the use of tables, and of graphics that contain information, in documents. When using tables, all rows, columns, and headings should be clear. For both tables and graphics, alt tags should be used to describe the purpose of the element, and authors should consider adding a narrative that describes the important points of the table or graphic.

Companies that are thinking about going down the generative AI path also should consider a process of reviewing and approving documents for inclusion. Companies likely have a large number of documents that may no longer be relevant. While this is already a risk, generative AI makes this data more accessible and helps spread inaccurate or outdated information faster.

One solution to this problem is the creation of a “flag.” Here, answers may return with a red flag indicating that the user should use caution and double-check the answers. Programmatically, this may occur with all documents older than a specific date or for documents that don’t meet a certain quality score.
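A minimal sketch of such a programmatic flag follows. The cutoff date, the quality threshold, and the `SourceDoc` fields are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class SourceDoc:
    title: str
    last_reviewed: date
    quality_score: float   # hypothetical 0.0 to 1.0 score assigned at review


CUTOFF_DATE = date(2022, 1, 1)   # assumed: anything reviewed before this is stale
MIN_QUALITY = 0.7                # assumed minimum acceptable quality score


def needs_red_flag(cited_docs):
    """True if any cited document is older than the cutoff or scores too low."""
    return any(
        doc.last_reviewed < CUTOFF_DATE or doc.quality_score < MIN_QUALITY
        for doc in cited_docs
    )


fresh = SourceDoc("Current policy", date(2023, 3, 1), 0.9)
stale = SourceDoc("Old procedure", date(2019, 5, 1), 0.9)
weak = SourceDoc("Unstructured memo", date(2023, 3, 1), 0.4)

print(needs_red_flag([fresh]))          # no flag
print(needs_red_flag([fresh, stale]))   # flagged: one source is too old
```

Flagging the answer when any single cited document fails the check is the conservative choice; a bank could instead flag only when most of the cited sources fail.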

Alternatively, companies should consider a crowdsourced solution. Here, the application can allow users to flag answers they feel are not as accurate as possible. This creates a feedback loop that can trigger additional resources to review the answers and cited documents.
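The feedback loop might look something like the sketch below, in which a document is queued for human review once enough users have flagged answers that cite it. The threshold of three flags and the `FlagTracker` class are hypothetical.

```python
from collections import Counter

REVIEW_THRESHOLD = 3   # assumed number of user flags that triggers a review


class FlagTracker:
    def __init__(self, threshold=REVIEW_THRESHOLD):
        self.threshold = threshold
        self.flags = Counter()     # document ID -> number of user flags
        self.review_queue = []     # document IDs awaiting human review

    def flag_answer(self, cited_doc_ids):
        """Record one user flag against every document the answer cited."""
        for doc_id in cited_doc_ids:
            self.flags[doc_id] += 1
            # Queue the document exactly once, when it first hits the threshold.
            if self.flags[doc_id] == self.threshold:
                self.review_queue.append(doc_id)


tracker = FlagTracker()
tracker.flag_answer(["doc-a", "doc-b"])
tracker.flag_answer(["doc-a"])
tracker.flag_answer(["doc-a"])
tracker.flag_answer(["doc-a"])   # a fourth flag does not queue it again

print(tracker.review_queue)
```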

Four: Use Dialog Management To Constrain The Output

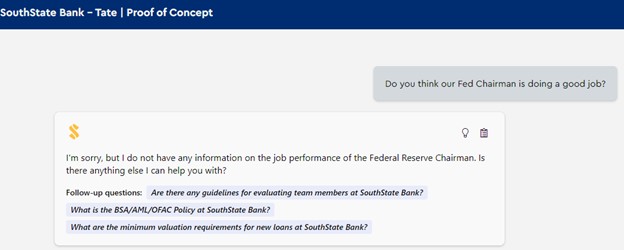

One way to program your enterprise generative AI model is the use of “shots” for prompt engineering. Here, a series of parameters or examples are fed in front of every question to ensure the accuracy of the information and to limit risk. For example, you may provide ChatGPT a template of prompts such as “Do not generate answers that don’t use the source documents,” “Be brief when answering questions unless the user asks for more information,” and “If there is not enough information in the source documents, then say you don’t know the answer.”
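One way to picture this is a small function that places the fixed instructions and the retrieved source text in front of every user question. This is a sketch, not Tate’s actual prompt: the `build_messages` helper is hypothetical, and the role/content layout simply follows the common chat-message convention. No model is called here.

```python
# Fixed instructions placed in front of every question (quoted from the text).
RULES = [
    "Do not generate answers that don't use the source documents.",
    "Be brief when answering questions unless the user asks for more information.",
    "If there is not enough information in the source documents, "
    "then say you don't know the answer.",
]


def build_messages(question, sources):
    """Return chat messages: rules first, then sources, then the question.

    `sources` is a list of (document_name, text) pairs from the search step.
    """
    rule_text = "\n".join(f"- {rule}" for rule in RULES)
    source_text = "\n\n".join(f"[{name}]\n{text}" for name, text in sources)
    return [
        {"role": "system", "content": f"Follow these rules:\n{rule_text}"},
        {"role": "system", "content": f"Source documents:\n{source_text}"},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    "How do I reset a customer's online banking password?",
    [("example-procedure.pdf", "placeholder source text")],
)
for message in messages:
    print(message["role"], "->", message["content"][:60])
```

Because the rules are assembled in code rather than typed by the employee, they apply to every question and can be reviewed and versioned like any other control.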

Using shots, banks can limit risk by helping ensure that the generative AI chatbot stays in character, provides the most accurate information, and doesn’t stray into areas with high reputational risk (example below).

By far, the largest problem experienced was not around wrong answers but answers that were not the best. Overall accuracy can be improved by utilizing dialog management and programmatically weighting some of the content. Newer information can be weighted for prioritized inclusion, as can more critical documents.
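A weighting scheme of this kind could be sketched as follows. The exponential decay, the one-year half-life, and the 1.5x boost for critical documents are illustrative assumptions, not the values used in Tate.

```python
from datetime import date


def weighted_score(relevance, last_updated, critical, today,
                   half_life_days=365, critical_boost=1.5):
    """Scale a search relevance score by document recency and criticality."""
    age_days = (today - last_updated).days
    recency = 0.5 ** (age_days / half_life_days)   # weight halves every half-life
    boost = critical_boost if critical else 1.0
    return relevance * recency * boost


today = date(2023, 6, 1)

# (name, relevance from search, last updated, is critical) - placeholder data
candidates = [
    ("older-guide", 1.0, date(2021, 6, 1), False),
    ("older-critical-policy", 1.0, date(2021, 6, 1), True),
    ("new-guide", 1.0, date(2023, 6, 1), False),
]

ranked = sorted(
    candidates,
    key=lambda c: weighted_score(c[1], c[2], c[3], today),
    reverse=True,
)
print([name for name, *_ in ranked])
```

With equal search relevance, the newest document ranks first and the critical policy outranks the ordinary guide of the same age.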

Another solution that worked well for dialog management was the use of context menus. At least to start, consider using a drop-down menu to help the generative AI application set the context. As an example, telling the model you are asking a question about residential mortgage loans vs. general consumer vs. commercial helps provide context to the model to narrow down the range of answers. This isn’t needed if employees write quality prompts. However, employees invariably get lazy in prompt writing, and the output gets confusing because the model is unclear about what is being asked.
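In its simplest form, a context menu just prepends the selected topic to whatever the employee types. The option keys, labels, and `apply_context` helper below are hypothetical.

```python
# Drop-down options: internal key -> wording placed in front of the question.
CONTEXT_OPTIONS = {
    "residential_mortgage": "residential mortgage loans",
    "general_consumer": "general consumer banking",
    "commercial": "commercial banking",
}


def apply_context(selection, question):
    """Prefix the user's question with the context chosen in the drop-down."""
    if selection not in CONTEXT_OPTIONS:
        raise ValueError(f"Unknown context: {selection!r}")
    return f"This question is about {CONTEXT_OPTIONS[selection]}. {question}"


prompt = apply_context("commercial", "What is the maximum loan term?")
print(prompt)
```

The same selection could also be used to restrict which documents are searched, which narrows the answer space further than a prompt prefix alone.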

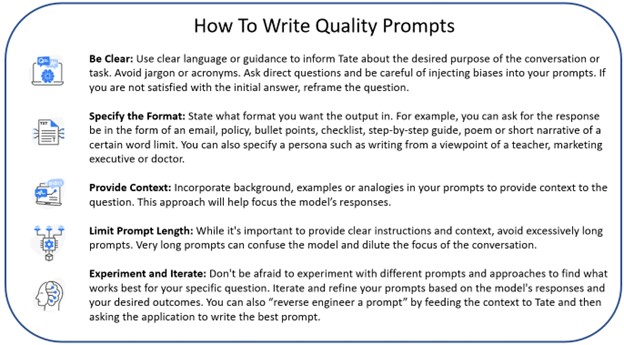

Five: Train Employees to Write Quality Prompts and Understand the Model’s Limitations

Prompt writing is a new skill that every banker now needs to learn. Writing straightforward questions and providing enough context and background information dramatically improves the quality of responses.

Generative AI is a powerful tool but still benefits from clear and specific instructions. By providing well-crafted prompts, you can guide the model toward generating more accurate and helpful responses in your conversational interactions. One feature that we built into Tate was additional questions that the user might want to consider (Item #3 in the first graphic). These suggested prompts not only provide a catalyst for other questions but also help the user refine the initial prompt more efficiently.

It is essential to set expectations about the model’s ability. With so much hype, many employees had expectations that were too high and were disappointed. As our Chief Data Architect likes to say, Tate is like a gifted 7th grader. Large language models and generative AI are good, but they are not perfect and not at the level of an experienced banker (yet). All output needs to be reviewed with a jaundiced eye.

Six: Transformation

One of our most surprising findings was the value of using ChatGPT to transform the output. Employees used this feature more than expected, a time savings we didn’t quantify. Employees can get their output in an easy-to-use format just by asking. This might be a narrative of a certain length, an email, bullet points, a checklist, or a step-by-step guide. Generative AI can respond in multiple languages, including Spanish, or can put the output in the voice of a marketing executive, doctor, teacher, or other persona.

Seven: Risk Model Management and Governance Around Generative AI

OpenAI and ChatGPT are just the tip of the iceberg. Banks need to take a broader view of all generative AI models. It would be wise for banks to bring their risk model management group up to speed and start training them on managing and monitoring such models.

In the past sixty days, several new models relevant to banks have been introduced. Google re-released “Bard” last week. The Bard generative AI model offers real-time internet access that allows it to ingest newer information, better use of images in output and for image search, better export and collaboration (excellent for companies using the Google Suite), the ability to review multiple drafts of output and choose the best one, native voice prompts, and better integrations. Some banks and vendors are now looking to build applications on top of Bard instead of ChatGPT.

Managing and monitoring these models will become increasingly difficult as more generative AI models are embedded in applications and made available through various plug-ins. These layers will be hard to discern.

In addition to Bard, Adobe, Salesforce, Amazon, Bloomberg, and just about every other major tech company are pivoting to incorporate generative AI models in their existing offerings. Risk groups would be wise to inventory these models that touch the institution, monitor their performance, and set some standards for each model’s usage. Some of these models present minimal risk, while others require greater resources.

Our point here is that each generative AI model is slightly different, presenting a different risk profile. Banks will need to understand the strengths and weaknesses of each model as they are likely to form the basis of generative AI engines for a myriad of bank products in the future.

For example, the use of imagery and synthetic data (data that the model generates itself) are two areas that very few banks have incorporated into their framework. To this point, educating the risk group on model types is also growing in importance. “Transformer” models, such as the one behind ChatGPT, use an attention mechanism to weigh the context of every word in a prompt and then generate output one token at a time. “Generative adversarial networks” and “variational autoencoder models” both iterate on training data and are used to create more synthetic data. Knowing which type of model you are dealing with provides a clue to the model’s capabilities and potential risks.

There are certain standards that banks should apply to each model. Risk groups should consider a specialized checklist to start tracking these models and rating them as to their accuracy, the potential for bias, security, transparency, data privacy, audit approach/frequency, ethical considerations (infringement of intellectual property, deep fake creation, etc.), and future regulatory compliance.
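A starting point for such a checklist could be as simple as the sketch below. The criteria are taken from the list above; the `ModelRecord` class, the 1-to-5 scale, and the example scores are hypothetical and do not represent an assessment of any real model.

```python
from dataclasses import dataclass, field

# Criteria drawn from the checklist described in the text.
CRITERIA = (
    "accuracy", "bias", "security", "transparency", "data_privacy",
    "audit_approach", "ethical_considerations", "regulatory_compliance",
)


@dataclass
class ModelRecord:
    name: str
    vendor: str
    ratings: dict = field(default_factory=dict)   # criterion -> 1 (weak) to 5 (strong)

    def rate(self, criterion, score):
        if criterion not in CRITERIA:
            raise KeyError(f"Unknown criterion: {criterion}")
        if not 1 <= score <= 5:
            raise ValueError("score must be between 1 and 5")
        self.ratings[criterion] = score

    def unrated(self):
        """Criteria that still need an assessment."""
        return [c for c in CRITERIA if c not in self.ratings]

    def weakest(self):
        """Lowest-rated criteria, i.e. where to focus resources first."""
        if not self.ratings:
            return []
        low = min(self.ratings.values())
        return sorted(c for c, s in self.ratings.items() if s == low)


record = ModelRecord(name="example-chatbot", vendor="example-vendor")
record.rate("accuracy", 4)
record.rate("bias", 3)
record.rate("security", 3)

print(record.unrated())
print(record.weakest())
```

An inventory is then just a list of these records, one per model that touches the institution, which makes gaps in coverage easy to report.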

Conclusion

Generative AI is a material shift in bank operations and structure. The future of financial services is to pair bankers with generative AI models, a pairing that will ultimately become the next user experience frontier.

Aside from being a high-impact product, Tate has taught us many lessons that have forced us to better understand the ramifications of generative AI and large language models. It has helped the entire bank think differently about the opportunities and the risks. Tate will start with internal text data and then expand to handle data streams, audio, video, and graphics. Once the bank is comfortable with the risks, it can develop its application to be more customer-facing.

This crawl, walk, run approach is almost mandatory due to generative AI’s complexity. Generative AI will continue to accelerate, and banks need to get ahead of many of these changes to maintain a competitive advantage while reducing their risk profile.