Our Custom Development Solutions group has been working to revive our Tech Challenge coding competition culture and ultimately asked: why reinvent the wheel? A lot of our client work focuses on application modernization, so why not modernize an old Tech Challenge application?

Background

In 2008, Bryan Dougherty, now our General Manager, created the original Tech Challenge. The game is loosely based on a childhood-favorite, battle-style board game: sink all of your opponents’ ships to win. A few enhancements were added to make the gameplay more exciting. Just like the gameboard, the battlefield is a grid. However, unlike the classic game, up to 16 players can battle in the same game. Each team’s fleet is assigned to a region of the battlefield.

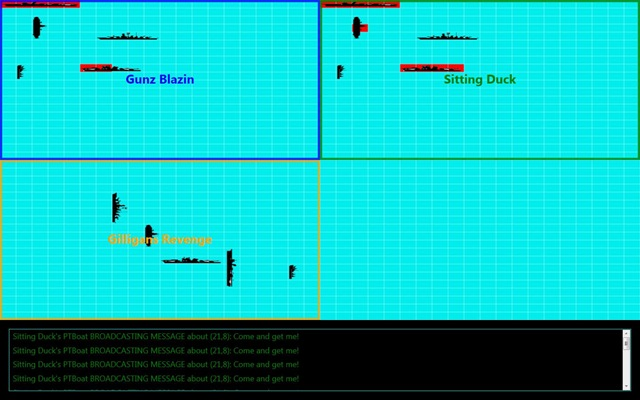

The sample screenshot shows a game with three teams. Blocks in red indicate damage. The bottom of the screen displays information such as messages, order results, or ships sunk.

Gameplay

The simple rules of the game are:

- Each player must set their fleet. For a fleet to be valid, it must contain one of each type of ship.

- The game requires a fleet to be set within a 20 x 20 region. If a player positions a ship outside the region, the player will be kicked out of the game.

- Stacking ships is disallowed. The player must not place a ship in such a way that it will overlap with a ship they have previously placed. If this occurs, the player will be kicked out of the game.

- Each turn consists of one order pulled from each player.

- Orders must be one of three types: attack, reconnaissance, or broadcast a message.

- Players can execute any order as long as at least one ship remains in their fleet. Once all of their ships are sunk, they can no longer issue orders.

- The game will not protect you from errantly placed orders. If you attack your own ship positions, they will register as hits. If you send order coordinates outside the maximum battlefield size, the order counts as a turn and a miss.

- After an order is received from a player and processed, the subsequent order and order results will be sent in a response to each player.
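The fleet placement rules above can be sketched as a small validator. This is only an illustration, not the game's actual code: the ship lengths, the `Placement` fields, and the reading of "one of each type" as "exactly one" are all assumptions, since the article names only a couple of ship classes.

```python
from dataclasses import dataclass

REGION_SIZE = 20  # from the rules: a fleet must fit inside a 20 x 20 region

# Hypothetical ship lengths; the article does not list ship types or sizes.
SHIP_LENGTHS = {"AircraftCarrier": 5, "Destroyer": 3}


@dataclass(frozen=True)
class Placement:
    ship_type: str
    x: int            # column of the first cell, relative to the region
    y: int            # row of the first cell, relative to the region
    horizontal: bool  # True: extends along x; False: extends along y

    def cells(self):
        """Return every cell the ship occupies."""
        length = SHIP_LENGTHS[self.ship_type]
        if self.horizontal:
            return [(self.x + i, self.y) for i in range(length)]
        return [(self.x, self.y + i) for i in range(length)]


def validate_fleet(placements):
    """Return a list of rule violations; an empty list means the fleet is valid."""
    errors = []
    # Assumption: "one of each type" means exactly one ship per type.
    if sorted(p.ship_type for p in placements) != sorted(SHIP_LENGTHS):
        errors.append("fleet must contain exactly one of each ship type")
    occupied = set()
    for p in placements:
        if p.ship_type not in SHIP_LENGTHS:
            continue  # unknown type, already reported by the check above
        for cell in p.cells():
            x, y = cell
            if not (0 <= x < REGION_SIZE and 0 <= y < REGION_SIZE):
                errors.append(f"{p.ship_type} leaves the region at {cell}")
            elif cell in occupied:
                errors.append(f"{p.ship_type} overlaps another ship at {cell}")
            occupied.add(cell)
    return errors
```

In the real game either violation gets the player kicked out, so a player would want to run a check like this before submitting a fleet.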

The players’ fleets are registered at the start. When all fleets are registered, the game returns a list of the players in the game and their start and end board coordinates, which gives each player the information needed to determine which regions of the board to attack. The game then starts, and absolute coordinates are set for each ship. The absolute coordinates are the coordinates on the shared board that contains all fleets.

Two enhancements were added to the game to increase the enjoyment and complexity: broadcast and reconnaissance. Each player submits one move per turn (attack, broadcast, or reconnaissance), and only the attack command inflicts damage on an opponent. The game iterates through the players for their next move, calculates the result, displays it on the UI, and then returns the result of the move to all players. Minimal updates and enhancements were made to the overall gameplay logic during the application modernization.
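The turn flow can be sketched as a simple round-robin loop. This is a rough illustration under several assumptions: only attack orders are modeled, the `board` dictionary, `next_target` callback, and result strings are hypothetical, and `max_turns` stands in for the game's timeout.

```python
def resolve_attack(board, width, height, target):
    """board maps absolute (x, y) -> owner name for every intact ship cell."""
    x, y = target
    if not (0 <= x < width and 0 <= y < height):
        return "miss"  # off the battlefield: the turn is spent and nothing is hit
    # The game does not protect a player from hitting their own ships.
    owner = board.pop(target, None)  # remove the cell so it cannot be hit twice
    return "miss" if owner is None else "hit:" + owner


def play(board, width, height, players, next_target, max_turns=1000):
    """Iterate through the players until one fleet remains or the game times out."""
    log = []
    for turn in range(max_turns):
        if len(set(board.values())) <= 1:
            break  # at most one fleet still has ships
        name = players[turn % len(players)]
        if name not in board.values():
            continue  # all of this player's ships are sunk: no more orders
        target = next_target(name)  # stand-in for asking the player for an order
        log.append((name, target, resolve_attack(board, width, height, target)))
    survivors = set(board.values())
    winner = survivors.pop() if len(survivors) == 1 else None
    return winner, log
```

In this sketch, a game in which nobody ever lands a hit ends with `winner` set to `None` once `max_turns` is reached.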

The broadcast command allows a player to broadcast a message to every other fleet. The message is displayed on the UI and then the broadcast message is returned to each player.

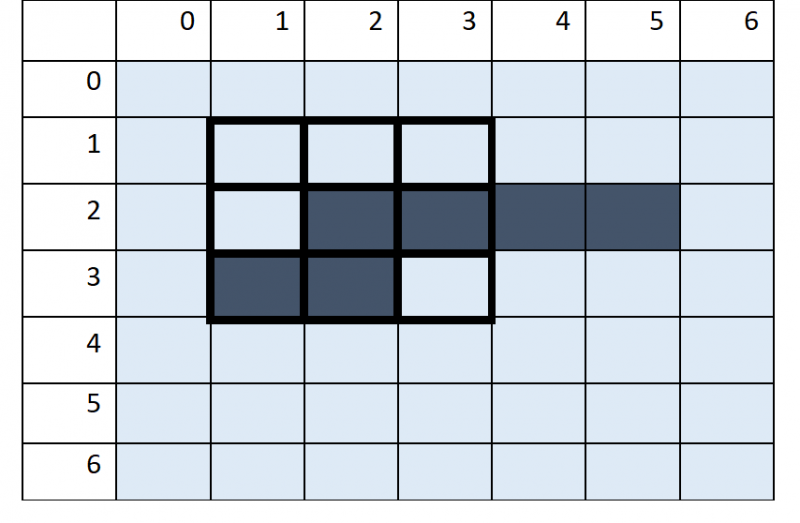

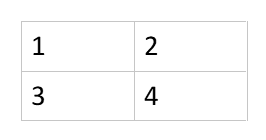

The reconnaissance command allows a player to scan a 3×3 area starting at a specified coordinate. The scan starts at that coordinate and moves top to bottom, then left to right. If one or more ships are found within the 3×3 area, the game returns the coordinate of the first space where a ship is found, and only to the player that submitted the reconnaissance command. All other players are only notified that a recon happened, with no coordinate information. An example of a potential recon is shown below. Here a recon was ordered at (1, 1). The recon begins there and moves top to bottom, and then on to the next column. Once the recon scans (3, 1), it has found a ship and stops any further scans in the recon area, regardless of whether other ships are still in the area.

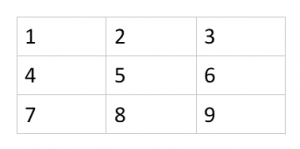

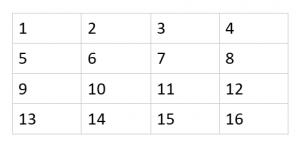
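The scan order described above can be sketched in a few lines. This assumes coordinates are `(x, y)` pairs with `x` as the column and `y` increasing downward, and that the specified coordinate is the top-left corner of the 3×3 area; the function name and the `ship_cells` set are illustrative only.

```python
def recon(ship_cells, start):
    """Scan the 3x3 area that begins at `start`.

    Returns the first occupied cell found, or None if the area is empty.
    """
    sx, sy = start
    for x in range(sx, sx + 3):        # columns, left to right
        for y in range(sy, sy + 3):    # within each column, top to bottom
            if (x, y) in ship_cells:
                return (x, y)          # stop at the first ship cell found
    return None
```

With ship cells at (3, 1) and (3, 3), `recon(ship_cells, (1, 1))` returns (3, 1), matching the example: the first two columns are scanned in full before the third column is reached.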

Board / Player Layouts:

2 players: battlefield size – 40×20

3-4 players: battlefield size – 40×40

5-9 players: battlefield size – 60×60

10-16 players: battlefield size – 80×80
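As a rough sketch, the layouts and the relative-to-absolute coordinate translation could look like the following. The battlefield sizes come from the list above; the row-by-row assignment of regions to players is an assumption, since the article does not say how regions are handed out.

```python
REGION = 20  # each fleet occupies a 20 x 20 region


def battlefield_size(player_count):
    """Return (width, height) of the shared battlefield for a player count."""
    if not 2 <= player_count <= 16:
        raise ValueError("the layouts cover 2 to 16 players")
    if player_count == 2:
        return (40, 20)
    if player_count <= 4:
        return (40, 40)
    if player_count <= 9:
        return (60, 60)
    return (80, 80)


def region_origin(player_index, player_count):
    """Top-left corner of a player's region (assumed row-by-row assignment)."""
    width, _ = battlefield_size(player_count)
    per_row = width // REGION
    return ((player_index % per_row) * REGION,
            (player_index // per_row) * REGION)


def to_absolute(relative, origin):
    """Translate a coordinate inside a region to the shared battlefield."""
    return (origin[0] + relative[0], origin[1] + relative[1])
```

For example, under these assumptions the second player in a two-player game has its region origin at (20, 0), so a ship cell at relative (3, 4) sits at absolute (23, 4).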

Application Modernization

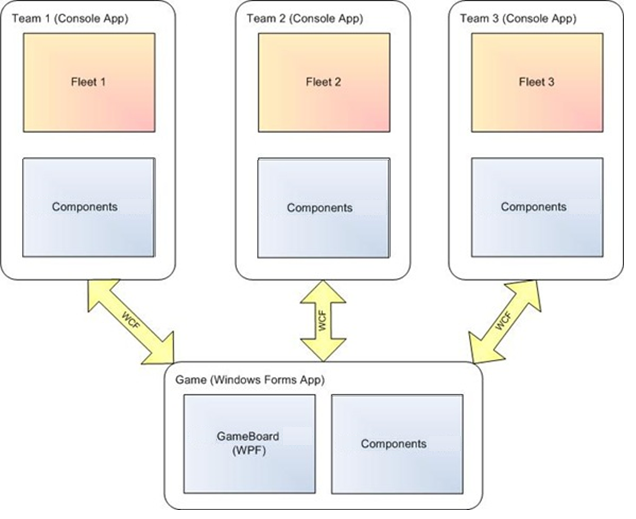

Original Architecture

The original game was built in 2008 using WPF and WCF. The application was divided into three parts. The Components project was a shared assembly that contained the classes that managed the communication and the logic of the game as well as class definitions for all of the ships (i.e. AircraftCarrier, Destroyer, etc.). The Gameboard used WPF to display the action on the battlefield. Finally, console applications were built by each team wishing to compete.

Application Modernization Approach

We tried to approach the Tech Challenge application modernization much as we would a client project. First, we needed to understand the problem and determine the business goals of the project. The original game was 12 years old, wasn’t utilizing a lot of modern technology, and didn’t lend itself well to technology-agnostic players. We wanted to run the Tech Challenge (the sooner the better) with players that could be built using any modern technology relevant to our current business space. Updating the look and feel was less important, as we liked the retro vibe of the game UI. We created a phased approach to realize change and value as soon as possible while enhancing the framework to support future updates.

Major Decisions

We decided to limit major changes to the gameplay logic and the WPF Desktop App UI. The WPF UI changes were limited to how the system received a player command: from an API call rather than a series of WCF listeners/callbacks. The gameplay logic was moved into a WebJob and the respective player command calls were updated. The gameplay logic itself stayed the same, but the method of connecting to each player and the back-and-forth communication were updated. The updated WebJob used player URLs in the configuration file to initiate the HTTP client connections, and the listener/callback methods were replaced with GET/POST calls to defined endpoints.

We wanted to be able to store the games to re-run or process at a later time. The WebJob stored each game command in a JSON file saved to blob storage. The JSON file was in the same format and order the UI needed to process the game. This allowed for auditing of played games as well as re-running previously processed games through the UI, bypassing the WebJob if desired.
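The idea of an ordered, replayable game log can be illustrated with a short sketch. A local file stands in for blob storage here, and the command fields are made up for the example; the actual file format is not described in this article.

```python
import json
from pathlib import Path


def save_game(path, commands):
    """Write the processed commands, in play order, as one JSON array."""
    Path(path).write_text(json.dumps(commands, indent=2), encoding="utf-8")


def replay_game(path):
    """Yield the saved commands in their original order."""
    for command in json.loads(Path(path).read_text(encoding="utf-8")):
        yield command


# Hypothetical command shapes, for illustration only.
EXAMPLE_COMMANDS = [
    {"player": "Player1", "order": "attack", "x": 23, "y": 4, "result": "hit"},
    {"player": "Player2", "order": "broadcast", "message": "nice shot"},
    {"status": "GameComplete"},
]
```

Because the file keeps the commands in the order they were played, a UI can consume `replay_game(path)` exactly as it would consume live commands.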

We wanted the players to be technology agnostic. We wanted people to experiment with different technologies and dip their toes into something they weren’t necessarily familiar with. The entire gameplay is contained in two GET calls and two POST calls. As long as a player exposes an http://baseurl/{methodName} endpoint for each of the four calls and adheres to the correct data models, any technology can be used.
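To give a feel for how small such a player can be, here is a minimal sketch using only Python's standard library. The four method names (`fleet`, `order`, `gamestart`, `orderresult`) and every payload shape are hypothetical placeholders; the real method names and data models are not listed in this article.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def fleet(state, body):
    # Hypothetical fleet payload; the real data model is not shown here.
    return [{"ship": "Destroyer", "x": 0, "y": 0, "horizontal": True}]


def next_order(state, body):
    # Naive left-to-right, top-to-bottom sweep of a 20 x 20 region.
    n = state["next"]
    state["next"] = n + 1
    return {"type": "attack", "x": n % 20, "y": (n // 20) % 20}


def game_start(state, body):
    state["players"] = body  # remember the player list sent by the game
    return {"ok": True}


def order_result(state, body):
    state["results"].append(body)  # keep results for smarter targeting later
    return {"ok": True}


# Two GET calls and two POST calls; all four names are assumptions.
ROUTES = {
    ("GET", "/fleet"): fleet,
    ("GET", "/order"): next_order,
    ("POST", "/gamestart"): game_start,
    ("POST", "/orderresult"): order_result,
}


def dispatch(method, path, body, state):
    """Route one request to its handler and return (status, payload)."""
    handler = ROUTES.get((method, path))
    if handler is None:
        return 404, {"error": "unknown endpoint"}
    return 200, handler(state, body)


class PlayerHandler(BaseHTTPRequestHandler):
    state = {"next": 0, "results": []}  # shared, in-memory player state

    def _respond(self, method):
        length = int(self.headers.get("Content-Length") or 0)
        body = json.loads(self.rfile.read(length)) if length else None
        status, payload = dispatch(method, self.path, body, self.state)
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        self._respond("GET")

    def do_POST(self):
        self._respond("POST")


def serve(port=8000):
    """Run the player locally until interrupted."""
    HTTPServer(("127.0.0.1", port), PlayerHandler).serve_forever()
```

Calling `serve()` starts the player on a local port. Keeping the routing in `dispatch` means the player logic can be exercised without a running server.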

We also wanted the barrier to entry to be low to encourage participation. We provided basic and workable player samples in four different technologies: Azure API App, Google Cloud Endpoint, AWS API Gateway, and Python hosted on PythonAnywhere.

Result

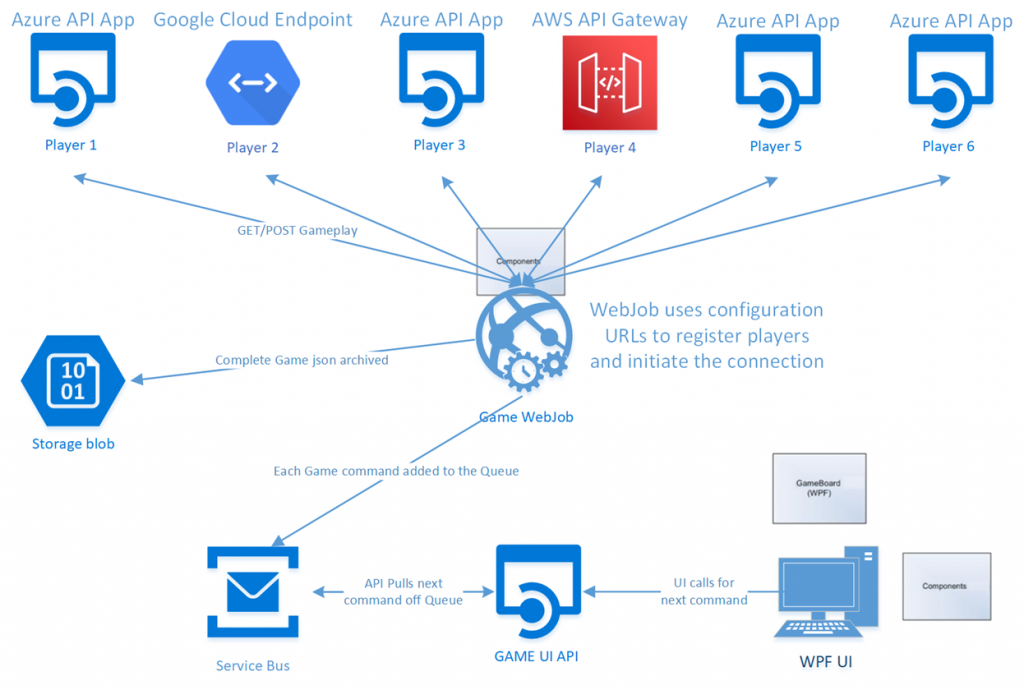

As depicted in the diagram, the WebJob was created to run the game logic and connect to each player through an HttpClient connection for the game commands. The WebJob sends each processed command to the Service Bus Queue and saves a copy to the game blob file. The WPF UI was updated to pull the next game command from an API. Each GET API call would pull the next game command off the Service Bus Queue until a game complete status was received. The UI display was not altered in the initial phase.
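The UI side of this flow amounts to a pull loop. The sketch below uses an in-memory callback in place of the API and the Service Bus Queue; the `"GameComplete"` status value, the `status` field, and the empty-poll limit are assumptions made for the example.

```python
GAME_COMPLETE = "GameComplete"  # hypothetical status value


def run_ui(get_next_command, render, max_empty_polls=3):
    """Pull commands one at a time until the game reports completion.

    get_next_command() stands in for the GET call that pops the next
    command off the queue; it returns None when nothing is waiting.
    Returns True if the game completed, False if polling gave up.
    """
    empty_polls = 0
    while empty_polls < max_empty_polls:
        command = get_next_command()
        if command is None:
            empty_polls += 1  # a real client would wait before retrying
            continue
        empty_polls = 0
        render(command)  # the UI only displays what it receives
        if command.get("status") == GAME_COMPLETE:
            return True
    return False
```

Because the loop depends only on `get_next_command`, the same UI code can be fed from the queue or from a saved game file.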

Challenges with the Application Modernization

Decoupling the game and player logic from the UI. Our team took a crash course on WPF, working to understand the UI and ensure it would be decoupled correctly. It took some initial work to determine where we wanted to decouple the connection and how much would live in the WebJob versus remain in the UI desktop app. One of our extensibility goals was to easily swap out UIs in a future phase, so we moved all of the game logic into the WebJob. This allowed the complete game to be run and processed without a UI. We limited the UI functionality to strictly displaying player moves on the screen as they were received from an API source.

Constraints around testability of the system. In order to test the full game end to end, we needed two players that were smart enough to actually finish the game. As the focus of the application modernization was on moving the game logic to the cloud and testing that it worked, initial player versions used either randomized attacks or a basic left-to-right, top-to-bottom traversal of the grid (which could result in 400 turns in a two-player game alone). The early games would take anywhere from 5 to 15 minutes to fully process in the WebJob and then another 5 to 15 minutes to watch on the UI. If we wanted to make one small change to the UI and redeploy, we’d have to start the whole process over again from the WebJob. One testing cycle could take 30 minutes or, even worse, time out. When testing 3-4 player games, that time could easily double or triple. To alleviate this constraint, we updated the UI and added a configuration setting that allowed us to process a saved game file either back through the Service Bus Queue or from a local JSON file. We also needed to support end-user testing once the Tech Challenge started. We wanted to make individual testing of player APIs easy: we didn’t want people to have to pull down the codebase, rebuild it, and run it, nor did we want people to update the base game code. We created a standalone executable as a test harness for player logic testing. Downloading the .exe and making a simple configuration update to point to the appropriate URL was all the setup necessary to test a local or deployed player.

Original Software and Modern Technology Integration. We ran into a few issues placing modern technology on top of a UI built in 2008. The original game wasn’t designed to manage asynchronous API calls, as it was built on .NET 3.0 and async/await wasn’t included in .NET until 4.5. During the application modernization, the initial iterations of async/await weren’t completely implemented, and async/await exceptions were getting lost on the stack. The UI would fail in the background while making a call to the API and simply freeze without a trace of what had occurred. There were also threading issues with non-async code calling into async code improperly: if execution ended in the caller, it might never get the results from the call, and it might halt execution altogether.
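The same class of problem can be shown in miniature outside of .NET. The sketch below is a Python `asyncio` analogy, not the original C# code: a call that is started but never awaited fails without the caller noticing, while an awaited call surfaces the exception where it can be handled. The function names are illustrative.

```python
import asyncio


async def fetch_next_command():
    """Stand-in for the UI's API call; always fails to show where the error goes."""
    raise ConnectionError("API unreachable")


async def fire_and_forget():
    # Started but never awaited: the caller carries on and sees no exception.
    task = asyncio.create_task(fetch_next_command())
    await asyncio.sleep(0)  # yield once so the task gets a chance to run and fail
    # The failure is only visible if someone inspects the task afterwards.
    return task.done() and task.exception() is not None


async def awaited():
    # Awaiting the call brings the exception back to the caller.
    try:
        await fetch_next_command()
    except ConnectionError as exc:
        return f"handled: {exc}"
    return "ok"
```

Running `asyncio.run(fire_and_forget())` completes without raising even though the call failed, which mirrors the "freeze without a trace" symptom described above.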

Player API considerations

Storage. The gameplay is exposed through APIs, so some element of state needs to be added to manage the game. How are you tracking game statistics? Do you respond to other players’ hits? Do you track every opponent at the ship level or just a total number of hits? How much is worth the initial effort? Phased approach thoughts: do you need to track every game and every player faced? Probably not for a run-once tournament. If it were a season and you played the same players multiple times, then it may make sense to track their ship positions, etc.

Gameplay logic. Is it better to focus your time on head-to-head or multi-player battle? Is reconnaissance worth the non-attack turn? Do you base your next attack on other players’ previous moves, i.e., Player4 hits Player3 at (3,3), as Player2 do you attack (3,4) or some surrounding coordinate as your next attack move? This is obviously more helpful in multi-player scenarios as a head-to-head match would just be your last call.
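One simple way to act on a reported hit is to queue up the surrounding coordinates as follow-up targets. The helper below is a hypothetical sketch of that idea, not any submitted player's strategy; it considers only the four orthogonal neighbors and skips cells that are off the board or already tried.

```python
def follow_up_targets(hit, width, height, already_tried=()):
    """Return the in-bounds orthogonal neighbors of a hit that are still untried."""
    x, y = hit
    candidates = [(x, y - 1), (x + 1, y), (x, y + 1), (x - 1, y)]
    tried = set(already_tried)
    return [
        (cx, cy)
        for cx, cy in candidates
        if 0 <= cx < width and 0 <= cy < height and (cx, cy) not in tried
    ]
```

For the example above, a hit at (3, 3) on a 40×40 board yields (3, 2), (4, 3), (3, 4), and (2, 3) as candidate next attacks.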

Tech Challenge Tournament 2020

Sixteen player APIs were submitted. Two tournament styles were set up: a head-to-head, bracket-style tournament and a multi-player battle royale tournament.

Head-to-Head Tournament

The head-to-head tournament was randomly seeded and played in a typical bracket style. One of the first-round games ended in a stalemate, as neither player accurately attacked the other, which resulted in a game timeout. Since both players failed to attack their opponent, they were disqualified from the tournament. To fill that spot in round 2, a redemption round was implemented consisting of the top 4 teams that lost in round 1. The winner of the redemption round re-entered the tournament for round 2. The tournament winner was C & C Torpedos, commanded by Bryan Dougherty.

Battle Royale Tournament

The battle royale tournament was broken down into two mini tournaments. The first mini tournament was a five-player battle. Two five-player games were held in the first round and then the top five finishing players out of those games played in the five-player game finale. The second mini tournament was a one-game, ten-player battle. The winner of both mini tournaments was Squadron 42 commanded by Evan Glanz.

The first phase of the Tech Challenge Application Modernization was completed successfully, fulfilling three main goals: hold a fun and engaging, low-barrier-to-entry Tech Challenge in the first half of 2020, support technology-agnostic players, and deliver an extensible solution where the UI can be easily swapped out in a future phase.