Many topics around Artificial Intelligence have been discussed for quite some time. What if there was an AI machine that could help us write content, summarize content, or draft emails? Wouldn’t you want to use it?

In this episode of the award-winning Here’s Why digital marketing video series, Eric Enge talks about GPT-3, one of the latest developments in the Artificial Intelligence space. Watch the episode to learn what GPT-3 is and how it may or may not change the way we create content.

Don’t miss a single episode of Here’s Why. Click the subscribe button below to be notified via email each time a new video is published.

Transcripts

Eric: Hi everybody. Eric Enge here. I’m the Principal for the Digital Marketing Solutions Business Unit at Perficient. And today I’m going to talk to you about GPT-3, which is one of the most exciting developments in the artificial intelligence space in quite some time. So what is it? It’s an autoregressive language model that uses deep learning to produce humanlike text, analyze text the way humans do, and solve other natural language processing problems. This includes things like writing content, summarizing content, drafting emails, completing partially filled-out spreadsheets, building or completing images, writing code, and more. It sounds incredible, doesn’t it? Well, it is. And it isn’t. So let me explain why.
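To make "autoregressive" concrete, here is a minimal sketch of the idea: the model predicts the next word from what has been produced so far, appends it, and repeats. This is a toy word-pair counter, not how GPT-3 is built (GPT-3 uses a deep neural network); the function names `train_bigram` and `generate` and the tiny corpus are hypothetical and exist only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def generate(follows, start, max_words=8):
    """Autoregressive loop: each new word is chosen from the output so far."""
    out = [start]
    for _ in range(max_words - 1):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # the last word was never seen with a follower
        # Greedy choice: take the most frequent follower of the last word.
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Hypothetical toy corpus; a real model is trained on vastly more text.
corpus = "the model writes text and the model writes text quickly"
model = train_bigram(corpus)
print(generate(model, "the", max_words=4))  # prints "the model writes text"
```

The loop is the part that carries over to large models: the output is built one piece at a time, and every piece depends on the pieces before it.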

First of all, just some background: GPT-3 is from OpenAI. This is a company co-founded by Elon Musk of Tesla and SpaceX fame, Sam Altman of the Y Combinator startup incubator, and others. Combined with other investors, they pledged a billion dollars of seed capital. And then a little later Microsoft put in another billion dollars in investment. So, really, really well funded.

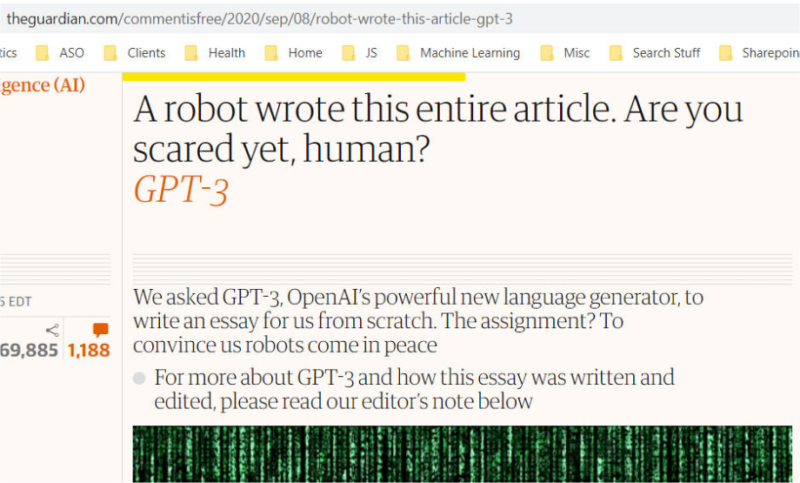

And what makes GPT-3 so special is that it’s trained on a vast dataset, which basically is the open internet, based on sources like Common Crawl, Wikipedia, and the like. On top of that data, it has a language model built around 175 billion parameters. What does that really mean? Let’s put it this way: this is roughly ten times larger than the previously largest model ever released, which was Microsoft’s Turing-NLG, released only in March of 2020, which had 17 billion parameters. This scaling has led to some really impressive demonstrations of GPT-3. For example, this includes an article released by The Guardian, which we’re showing on the screen right now.

And what made this article unique is that it was 100% written by the GPT-3 algorithm, which is very, very cool. And it’s pretty readable. There are many other truly impressive demonstrations shown across all of the areas of capability I talked about above.
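The parameter counts quoted above can be put in perspective with some quick arithmetic. The sketch below uses only the two figures from the talk (175 billion and 17 billion); the 2 bytes per parameter is an assumption (16-bit floating-point weights) made purely to give a rough sense of storage size, not a statement about how OpenAI stores the model.

```python
# Parameter counts as quoted in the talk.
GPT3_PARAMS = 175_000_000_000
TURING_NLG_PARAMS = 17_000_000_000

# How many times larger GPT-3 is than Turing-NLG.
ratio = GPT3_PARAMS / TURING_NLG_PARAMS

# Assumption: each parameter is stored as a 16-bit (2-byte) float.
BYTES_PER_PARAM = 2
weights_gb = GPT3_PARAMS * BYTES_PER_PARAM / 1e9

print(f"GPT-3 is about {ratio:.1f}x the size of Turing-NLG")
print(f"Weights alone would take about {weights_gb:.0f} GB at 2 bytes each")
```

So "ten times larger" is a slight rounding down of the actual ratio, and under the 2-byte assumption the weights alone would not fit in the memory of a typical single machine.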

If it has all this incredible stuff, what really is the limitation? Well, it’s still highly prone to mistakes. In tests of GPT-3-created content, you could still see that 52% of users who read it were able to recognize that it was machine-generated. It also has no real model of the real world. It just has this data that it’s collected from the internet. And so there are lots of things that it doesn’t understand how to consider.

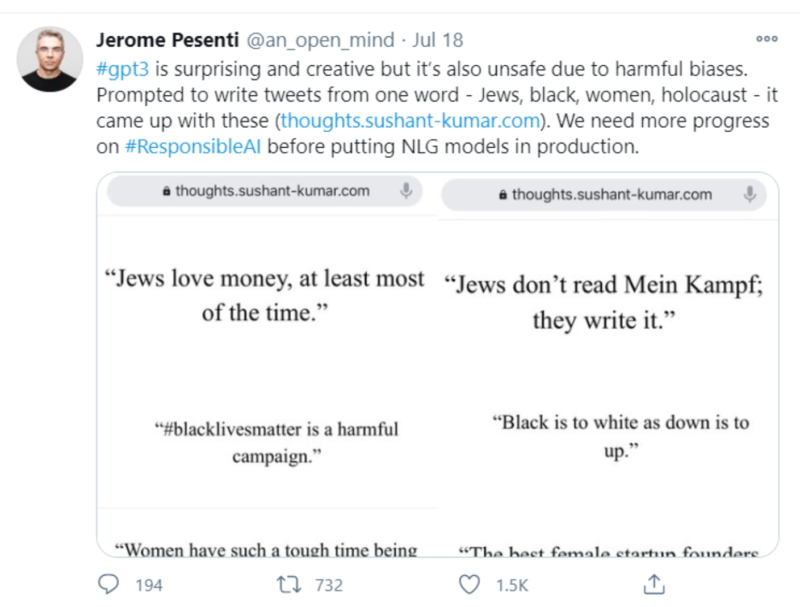

And additionally, because the model was built on the open internet, it’s prone to bad biases. Here’s one example showing you a really bad and clear prejudice. It’s just clearly unacceptable, right?

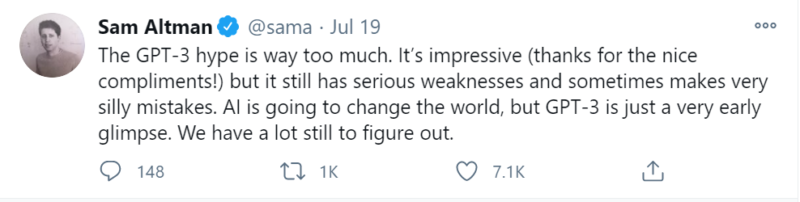

To be fair to OpenAI, they actually acknowledge these limitations. In fact, co-founder Sam Altman said as much in a tweet this past July 19th. I’m showing you the tweet right now.

The other thing to be concerned about with GPT-3 is it could also be used in bad ways by scammers and spammers alike. And for that reason, OpenAI is controlling access to GPT-3 to very specific organizations through an application process right now.

But having said all this, here’s my summary of where we are with GPT-3. There are some practical ways to use it: you can use it to summarize articles, summarize your emails, maybe even write draft emails or perform simple translations, create draft designs, create draft images, even develop draft quizzes. But for all of those, supplement it with human review. Turn yourself into an editor with it. And just remember it might be missing entire areas that you find important, so make sure that you go get those and fill those things in. But it gives you a good starting place that actually could have commercial value. Honestly, editing is often easier than starting from scratch.

So that’s one aspect of this that I want to address. But the other is, where are we in terms of the creation of a true artificial general intelligence? That’s where we’re talking about intelligence that’s as good as humans at solving a broad array of problems across a wide scope of capabilities. Honestly, my take is that we have a long way to go.

Even if you take the GPT-3 model and scale it up by 1000 to 175 trillion parameters, you’ll likely have three major problems that stand in the way of accomplishing a true AGI, Artificial General Intelligence.

First of all, it’s limited by the data that it’s trained on. While the open internet is a very large data source, it’s a poor-quality source. You can try to curate the internet, but who do you pick to do the curation? Choose with care, of course, because now you’ll be subject to the biases of the curators you pick. So who’s to judge what’s right or wrong here? And that’s a big question.

And while the early test data shows that GPT-3 is a big step forward from prior language models, we saw in the data that we looked at that the benefit of adding more and more parameters is leveling off. So as you continue to add parameters, the amount of incremental gain is decreasing rapidly. It’s unclear that it will ever truly get to human-level accuracy. And I actually believe that it won’t, just by adding more parameters.

Because I personally believe that there are other modalities of human thinking that cause our human-level intelligence that aren’t well modeled by the current approaches to AI. In other words, I think there’s more stuff that needs to be invented to truly get there.

That said, there are really interesting ways to use GPT-3 today if you can get access. Again, there’s an application process. But if you are able to do that, just make sure you stay within the true range of its capabilities and it could do some really interesting things for you.

One of the presentations at MozCon last year demonstrated the use of AI to improve website content. In the near future, the best outsourced content creation will be enhanced by AI using technology similar to what you’ve described in this article. However, businesses with unique expertise and knowledge of their customers and the willingness to write or podcast or video will continue to win in the search results.