What is Kubernetes?

In simple words, Kubernetes is an open source platform for managing containerized applications at scale: deploying them, scaling them automatically, keeping them running, and so on. It was originally designed by Google engineers, drawing on Google's experience of running containers at massive scale on its internal cluster manager, Borg. In 2015 Google donated the project to the Cloud Native Computing Foundation (CNCF), part of the Linux Foundation, which now hosts its ongoing development.

At its core, Kubernetes is a system that manages the resources of a cluster (machines, networking, and storage) and helps developers and system administrators deploy and run applications and containers on them.

Although the key concepts and configuration of Kubernetes are well documented on its official website, configuring and managing Kubernetes clusters in an on-premises environment is still not an easy job for a beginner.

Because Kubernetes configuration and management are complex, major cloud providers offer managed Kubernetes services: Google Kubernetes Engine (GKE) on Google Cloud Platform, Amazon Elastic Kubernetes Service (EKS) on Amazon Web Services (AWS), and Azure Kubernetes Service (AKS) on Microsoft Azure. These services take over much of the work of configuring Kubernetes and operating the cluster environment.

Basic Concepts of Kubernetes

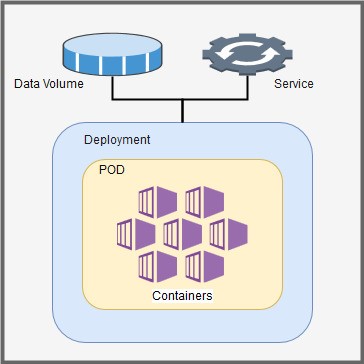

As shown in the diagram above, nodes, data volumes, services, deployments, pods, and containers are some of the most important parts of a Kubernetes cluster.

- Node: A node is a worker machine in a Kubernetes cluster (on AWS, typically an Amazon Elastic Compute Cloud (EC2) instance) that runs your application containers.

- Container: A container is a lightweight software bundle with the required packages and dependencies to run your application.

- Pod: A pod is the smallest deployable unit that Kubernetes manages. It wraps a group of one or more containers that are scheduled together on the same worker node and share its network and storage. A cluster can run many pods, and each pod gets its own unique IP address for connectivity.

- Service: A service gives a set of pods a single, stable network endpoint. Depending on its type, it exposes your application containers to other pods within the cluster or to clients outside the cluster network.

- Deployment: A deployment is a set of instructions for creating pods and keeping them running. Deployment files play a role similar to a Docker Compose file in a Docker environment. Among other things, a deployment specifies how many replicas of a pod should run. Kubernetes then continuously monitors the cluster and works to keep it matching that specification: if you delete a pod manually, the deployment immediately creates a replacement.

- Volume: A Kubernetes volume is a unit of storage that can be mounted into the containers running inside a pod. A volume can be backed by storage attached to the worker node or by cloud storage such as Amazon Elastic Block Store (EBS) or Amazon Elastic File System (EFS); the full list of supported storage types is on the Kubernetes website.

- Kubectl: kubectl is the command line tool for managing your cluster. It can run on any machine, including one outside the cluster; you just need to install it and configure it with the cluster's address and credentials. Once it is configured, you can perform all cluster operations from the kubectl command line.
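To make the relationship between these pieces concrete, here is a minimal sketch in Python (standard library only) that builds a Deployment and a Service as plain dictionaries and prints them as JSON, a format `kubectl apply -f` accepts alongside YAML. The app name `web`, the `nginx:1.25` image, the replica count, and the ports are illustrative assumptions, not values from this article.

```python
import json


def make_deployment(name: str, image: str, replicas: int, port: int) -> dict:
    """Deployment that keeps `replicas` identical pods running."""
    labels = {"app": name}  # links the Deployment, its pods, and the Service
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # which pods this Deployment owns
            "template": {  # every pod it creates is stamped from this template
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image,
                         "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def make_service(name: str, target_port: int, service_type: str = "ClusterIP") -> dict:
    """Service giving all pods labeled app=<name> one stable endpoint.

    ClusterIP is reachable only inside the cluster; "LoadBalancer" would
    ask the cloud provider for an external load balancer instead.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": name},
            # port: what clients connect to; targetPort: the container's port
            "ports": [{"port": 80, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    # A v1 List lets one file carry several objects for `kubectl apply -f`
    bundle = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            make_deployment("web", "nginx:1.25", replicas=3, port=80),
            make_service("web", target_port=80),
        ],
    }
    print(json.dumps(bundle, indent=2))
```

Saving the output to a file (for example `web.json`, a hypothetical name) and running `kubectl apply -f web.json` would ask the cluster for three pods plus a Service that routes traffic to them. The shared `app: web` label is what ties everything together: the Deployment's selector, the pod template's labels, and the Service's selector must all match.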

Now, what is Amazon EKS?

Amazon Elastic Kubernetes Service (EKS) is a managed service from AWS for running Kubernetes clusters on the AWS cloud platform, together with their worker nodes, auto scaling, and the required network configuration.

With EKS, there is no need to install and configure the Kubernetes control plane yourself on physical or cloud servers. EKS takes care of the required Kubernetes installation, patching, and high availability of the control plane.

To keep the cluster highly available, AWS runs the Kubernetes control plane (the master servers) across three different Availability Zones.
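One way to see why three zones is the usual choice: the control plane keeps its state in the etcd database, which uses the Raft consensus algorithm and only accepts writes while a strict majority (a quorum) of its members is reachable. Assuming one etcd member per Availability Zone, a short Python sketch of the arithmetic:

```python
def quorum(members: int) -> int:
    """Smallest strict majority of an etcd (Raft) cluster."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """Members that can be lost while a quorum can still be formed."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(f"{n} member(s): quorum={quorum(n)}, "
              f"survives {tolerated_failures(n)} failure(s)")
```

With one member per zone, two zones tolerate no more failures than one, while three zones is the smallest layout that keeps a quorum after losing an entire zone.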

Typical workflow with EKS:

- Provision an EKS cluster in your AWS account.

- Once the EKS cluster has been created, launch EC2 worker nodes for it using the AWS CloudFormation templates provided by AWS.

- From a machine with kubectl configured for the cluster, connect the EC2 nodes to the new EKS cluster.

- Once the EC2 nodes have joined the cluster, it is ready for you to deploy and manage your applications.
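The workflow above can be sketched as the AWS CLI and kubectl commands it roughly corresponds to. The Python below only builds and prints the commands; it executes nothing. The cluster name, region, IAM role ARN, subnet IDs, node template URL, and `aws-auth-cm.yaml` file name are placeholders. For self-managed nodes, joining is typically done by applying an `aws-auth` ConfigMap that maps the nodes' IAM role, and the node template's required `--parameters` are deliberately left out because they depend on the template you use.

```python
import shlex


def eks_bringup_commands(cluster: str, region: str, role_arn: str,
                         subnet_ids: list[str], node_template_url: str) -> list[list[str]]:
    """Return the EKS workflow as argv lists; nothing is executed."""
    return [
        # Step 1: provision the cluster (AWS runs the control plane)
        ["aws", "eks", "create-cluster", "--region", region, "--name", cluster,
         "--role-arn", role_arn,
         "--resources-vpc-config", "subnetIds=" + ",".join(subnet_ids)],
        # Wait until the control plane reports ACTIVE
        ["aws", "eks", "wait", "cluster-active", "--region", region, "--name", cluster],
        # Step 2: launch EC2 worker nodes from a CloudFormation template
        # (required --parameters omitted; some templates need CAPABILITY_NAMED_IAM)
        ["aws", "cloudformation", "create-stack", "--region", region,
         "--stack-name", f"{cluster}-nodes", "--template-url", node_template_url,
         "--capabilities", "CAPABILITY_IAM"],
        # Step 3: point kubectl at the cluster, then let the nodes join
        ["aws", "eks", "update-kubeconfig", "--region", region, "--name", cluster],
        ["kubectl", "apply", "-f", "aws-auth-cm.yaml"],  # hypothetical file name
        # Step 4: check that the nodes are registered and Ready
        ["kubectl", "get", "nodes"],
    ]


if __name__ == "__main__":
    # All values below are placeholders
    for argv in eks_bringup_commands(
        cluster="demo",
        region="us-east-1",
        role_arn="arn:aws:iam::111122223333:role/eksClusterRole",
        subnet_ids=["subnet-aaaa", "subnet-bbbb"],
        node_template_url="https://example.com/node-template.yaml",
    ):
        print(shlex.join(argv))
```

Keeping the commands as argument lists rather than strings means they could later be passed to `subprocess.run` without shell quoting issues; `shlex.join` is used only to print them readably.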

Basic architecture of a Kubernetes environment with the Amazon EKS service:

Note: Architecture may vary according to your requirements and configurations.

Conclusion:

Kubernetes is well suited to orchestrating large containerized platforms while making efficient use of resources. Its scheduler selects a node for each new pod according to a set of rules, such as the resources the pod requests and what each node has free, which helps use server capacity more efficiently. It also offers many other features, including auto scaling, self-healing, automated rollouts and rollbacks, and replication, which make it possible to automate deployment and container management.
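The Kubernetes scheduler works in two phases: it filters out nodes that cannot run a pod, then scores the remaining nodes and picks the highest. The Python below is a deliberately simplified toy model of that idea, not the real kube-scheduler. It filters on CPU and memory requests only and scores with a "most capacity left after placement" heuristic, similar in spirit to the scheduler's least-allocated resource scoring. Node names, sizes, and pod requests are made up.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int  # millicores
    mem_capacity: int  # MiB
    cpu_used: int = 0
    mem_used: int = 0


@dataclass
class Pod:
    name: str
    cpu: int  # requested millicores
    mem: int  # requested MiB


def schedule(pod: Pod, nodes: list[Node]) -> Optional[Node]:
    # Filter: keep only nodes with enough free CPU and memory for the request
    feasible = [
        n for n in nodes
        if n.cpu_capacity - n.cpu_used >= pod.cpu
        and n.mem_capacity - n.mem_used >= pod.mem
    ]
    if not feasible:
        return None  # no fit: the pod would stay Pending

    # Score: average fraction of CPU and memory left free after placement;
    # higher is better, which spreads pods across lightly loaded nodes
    def score(n: Node) -> float:
        cpu_left = (n.cpu_capacity - n.cpu_used - pod.cpu) / n.cpu_capacity
        mem_left = (n.mem_capacity - n.mem_used - pod.mem) / n.mem_capacity
        return (cpu_left + mem_left) / 2

    best = max(feasible, key=score)
    # Bind: reserve the pod's requests on the chosen node
    best.cpu_used += pod.cpu
    best.mem_used += pod.mem
    return best


if __name__ == "__main__":
    nodes = [
        Node("node-a", 2000, 4096, cpu_used=1500, mem_used=1024),
        Node("node-b", 2000, 4096, cpu_used=500, mem_used=2048),
        Node("node-c", 1000, 1024),
    ]
    for i in range(7):
        chosen = schedule(Pod(f"pod-{i}", cpu=500, mem=512), nodes)
        print(f"pod-{i} ->", chosen.name if chosen else "Pending")
```

With seven identical pods, the first ones spread across all three nodes and the last one is reported as Pending because no node has room left. In a real cluster such a pod stays Pending until capacity frees up or, with node auto scaling, a new node is added.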

With Amazon EKS, we can create a Kubernetes cluster in a few steps without worrying about installation or hardware management. EKS runs the Kubernetes API server and the etcd database across three different Availability Zones to provide high availability. Beyond core Kubernetes, EKS also offers cluster management features such as AWS Identity and Access Management (IAM) integration, load balancer support, Amazon EBS for cluster volumes, Amazon Route 53 DNS records, and node auto scaling.

You can find more information about Kubernetes clusters and Amazon EKS on their official websites.